densebody_pytorch

PyTorch implementation of CloudWalk’s recent paper DenseBody.

Note: For most recent updates, please check out the dev branch.

Update on 20190613: A toy dataset has been released to facilitate the reproduction of this project. Check out PREPS.md for details.

Update on 20190826: A pre-trained model (Encoder/Decoder) has been released to facilitate the reproduction of this project.

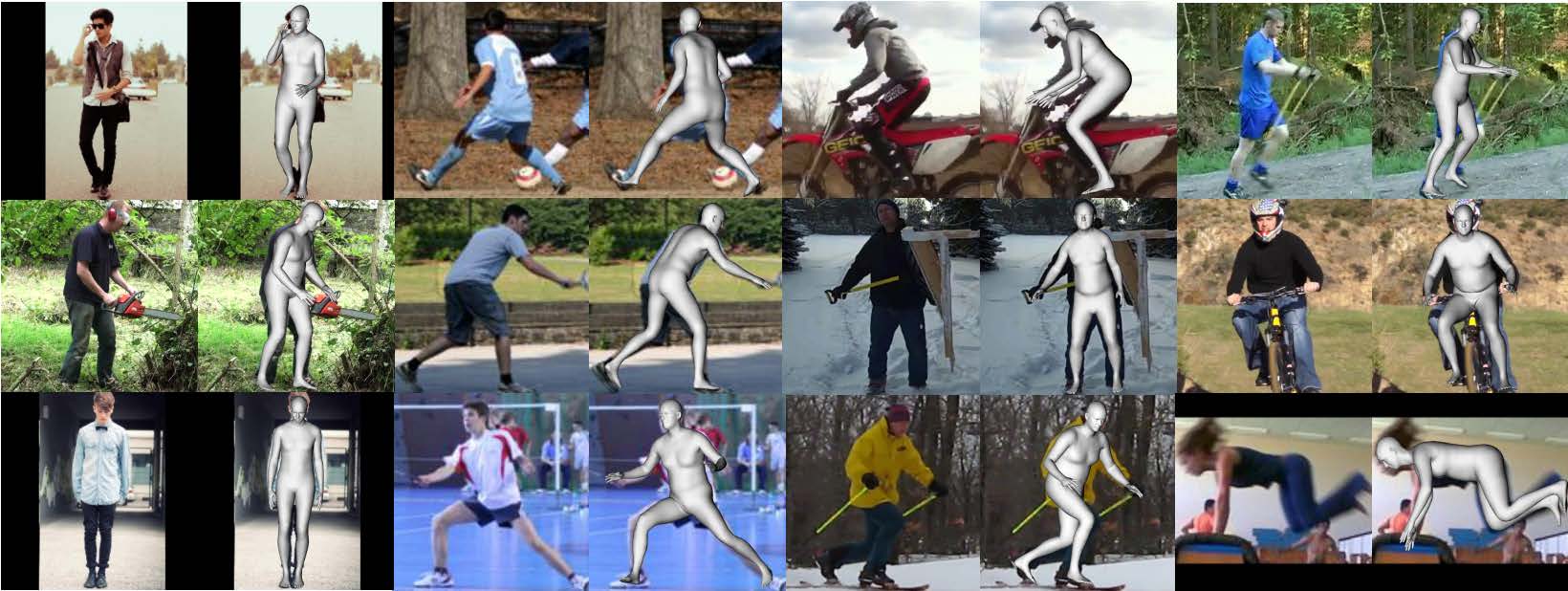

Reproduction results

Here is the reproduction result (left: input image; middle: ground truth UV position map; right: estimated UV position map).
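For readers new to the representation: a UV position map is an image whose pixels store 3D coordinates instead of colors, addressed by each surface point's UV coordinate. The following is a minimal sketch of that idea, not the code used in this repo. It scatters per-vertex positions into the map at the nearest pixel, whereas a full generator would typically interpolate across mesh faces; see data_utils/UV_map_generator.py for the actual implementation. The function name `scatter_uv_position_map`, the 256 resolution default, and the row = v, column = u convention are all assumptions made for illustration.

```python
import numpy as np


def scatter_uv_position_map(vertices, uv_coords, resolution=256):
    """Write each vertex's 3D position into the pixel addressed by its UV coordinate.

    vertices:  (N, 3) array of 3D positions.
    uv_coords: (N, 2) array of (u, v) values in [0, 1], one pair per vertex.
    Returns a (resolution, resolution, 3) float map and a boolean mask of filled pixels.
    """
    vertices = np.asarray(vertices, dtype=np.float32)
    uv_coords = np.asarray(uv_coords, dtype=np.float32)

    uv_map = np.zeros((resolution, resolution, 3), dtype=np.float32)
    mask = np.zeros((resolution, resolution), dtype=bool)

    # Convert continuous UV coordinates to integer pixel indices.
    # Assumed convention: u indexes columns, v indexes rows.
    pixels = np.round(uv_coords * (resolution - 1)).astype(int)
    pixels = np.clip(pixels, 0, resolution - 1)
    cols, rows = pixels[:, 0], pixels[:, 1]

    # Each addressed pixel now holds an (x, y, z) position instead of a color.
    uv_map[rows, cols] = vertices
    mask[rows, cols] = True
    return uv_map, mask


# Hypothetical two-vertex example.
demo_map, demo_mask = scatter_uv_position_map(
    [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]],
    [[0.0, 0.0], [1.0, 1.0]],
)
```

The mask marks which pixels received a value; pixels not covered by any vertex stay at zero in this sketch.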

Update Notes

- SMPL official UV map is now supported! Please check out PREPS.md for details.

- Code reformatting complete! Please refer to data_utils/UV_map_generator.py for more details.

- Thanks Raj Advani for providing new hand crafted UV maps!

Training Guidelines

Please follow the instructions in PREPS.md to prepare your training dataset and UV maps. Then run train.sh or nohup_train.sh to begin training.

Customizations

To train with your own UV map, check out UV_MAPS.md for detailed instructions.

To explore different network architectures, check out NETWORKS.md for detailed instructions.

TODO List

- Creating ground truth UV position maps for Human36m dataset.

- 20190329 Finish UV data processing.

- 20190331 Align SMPL mesh with input image.

- 20190404 Data washing: Image resize to 256*256 and 2D annotation compensation.

- 20190411 Generate and save UV position map.

- radvani: Hand-parsed new 3D UV data.

- Validity checked with minor artifacts (see results below)

- Making UV_map generation module a separate class.

- 20190413 Prepare ground truth UV maps for washed dataset.

- 20190417 SMPL official UV map supported!

- 20190613 A testing toy dataset has been released!

- Prepare baseline model training
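The data washing step above resizes images to 256*256, which means any 2D annotations given in pixel coordinates have to be rescaled to match. The sketch below shows one way that adjustment could look, assuming "2D annotation compensation" refers to this kind of coordinate rescaling; the repo's actual procedure is described in PREPS.md and may differ. The function name `rescale_keypoints` and the example sizes are hypothetical.

```python
def rescale_keypoints(keypoints, orig_size, target_size=(256, 256)):
    """Map (x, y) pixel annotations from the original image size to the resized one.

    keypoints:   iterable of (x, y) pairs in original pixel coordinates.
    orig_size:   (width, height) of the original image.
    target_size: (width, height) after resizing; 256*256 as in the list above.
    """
    orig_w, orig_h = orig_size
    target_w, target_h = target_size
    # Scale each axis independently so a non-square source image is handled too.
    return [(x * target_w / orig_w, y * target_h / orig_h) for x, y in keypoints]


# Hypothetical example: a 1000*500 image with two annotated joints.
resized_points = rescale_keypoints([(500, 250), (1000, 500)], orig_size=(1000, 500))
```

Scaling the two axes independently changes the aspect ratio when the source image is not square. If the image is cropped or padded before resizing instead, the crop offset would also need to be subtracted from each point first.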

Authors

Lingbo Yang (Lotayou): The owner and maintainer of this repo.

Raj Advani (radvani): Provided several hand-crafted UV maps and much constructive feedback.

Citation

Please consider citing the following paper if you find this project useful.

DenseBody: Directly Regressing Dense 3D Human Pose and Shape From a Single Color Image

Acknowledgements

The network training part is inspired by BicycleGAN.